ICCV2021 Competition

The CAMP2021 competition has two challenge tasks.

Sample dataloader code

You can use the sample scripts to prepare for the competition.

Important dates

Evaluation page open: September 8, 2021

Evaluation page close: October 7, 2021

Report submission deadline: October 12, 2021

Workshop: October 17, 2021

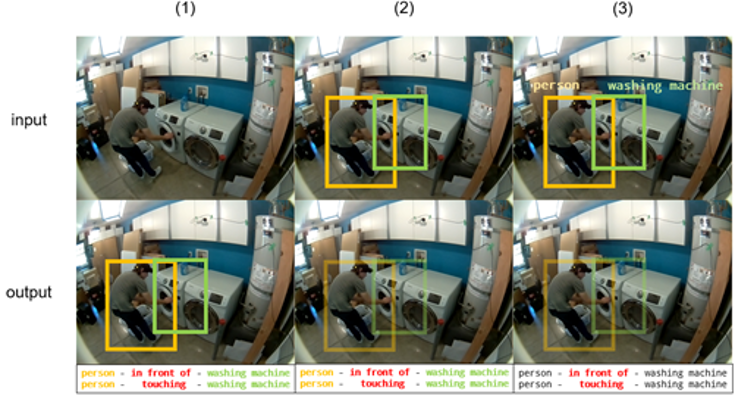

Challenge #1: Scene-graph Generation

About

We use scene graphs to describe the relationships between a person and the objects used while performing an action. In this track, algorithms must predict a scene graph for every frame, capturing how the graph changes as the video progresses. Participants may also use audio information in this track. External datasets for pre-training are allowed, but their use must be clearly documented. Because a single human-object pair can have multiple relationships, no graph constraint (single-relationship constraint) is applied.

Evaluation Metric

Scene graph prediction is evaluated in the scene graph classification (SGCLS) setting.

The task is to predict object categories and predicate labels between the person and each object.

Participants may use the video, other modalities, and the ground-truth bounding boxes as input.

The evaluation metric is recall@k: for each test frame, we compute the fraction of ground-truth relationship triplets that appear among the k most confident predicted relationships.

We use k = 10 and k = 20.
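A minimal sketch of recall@k without a graph constraint may help clarify the metric. It assumes a hypothetical data layout, not the official submission format: the person is the implicit subject of every triplet, each ground-truth triplet is `(box_index, object_category, predicate)`, each prediction is `(confidence, box_index, object_category, predicate)`, and a prediction matches only if all three fields agree. The function names are illustrative, and averaging per-frame recall over frames is an assumption; the official evaluation code for Task 1 is authoritative.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical layout: the subject is always the person, so it is omitted.
Triplet = Tuple[int, str, str]            # (box index, object category, predicate)
Prediction = Tuple[float, int, str, str]  # (confidence, box index, object category, predicate)


def frame_recall_at_k(gt: Set[Triplet], preds: List[Prediction], k: int) -> float:
    """Fraction of one frame's ground-truth triplets found in its top-k predictions."""
    # Rank all predictions by confidence. With no graph constraint, the same
    # person-object pair may contribute several predicates to the top k.
    top_k = sorted(preds, key=lambda p: p[0], reverse=True)[:k]
    predicted = {(box, obj, pred) for _, box, obj, pred in top_k}
    return len(gt & predicted) / len(gt)


def recall_at_k(gt_by_frame: Dict[str, Set[Triplet]],
                preds_by_frame: Dict[str, List[Prediction]], k: int) -> float:
    """Mean per-frame recall@k over frames that have at least one ground-truth triplet (assumption)."""
    scores = [frame_recall_at_k(gt, preds_by_frame.get(frame, []), k)
              for frame, gt in gt_by_frame.items() if gt]
    return sum(scores) / len(scores)
```

For example, if a frame's ground truth has a person both `holding` and `looking_at` the same cup, but a `table` prediction outranks `looking_at`, the frame scores 0.5 at k = 2 and 1.0 at k = 3.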

Evaluation code and submission format

Here is the evaluation code for Task 1.

Challenge #2: Privacy Concerned Activity Recognition

About

Privacy-preserving recognition methods are important for practical applications. This task is video-level activity recognition, except that the input videos are blurred so that they look as if they were recorded with a multi-pinhole camera. Participants may use unblurred images for training, but the test data contains only blurred images. External datasets for pre-training are allowed, but their use must be clearly documented.
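Because models may be trained on unblurred images but are tested on blurred ones, it can help to simulate the blur during training. A multi-pinhole camera projects several shifted copies of the scene onto the sensor, where they overlap. The sketch below approximates this by averaging zero-padded, shifted copies of a grayscale frame. The function name and the pinhole offsets are hypothetical; the sketch ignores depth-dependent parallax and diffraction, and it is not the organizers' blurring procedure.

```python
from typing import List, Tuple

Image = List[List[float]]  # grayscale frame, indexed as image[row][col]


def multi_pinhole_blur(image: Image, offsets: List[Tuple[int, int]]) -> Image:
    """Average one shifted copy of `image` per pinhole offset (dy, dx).

    Pixels shifted outside the frame are dropped (zero padding, no wrap-around).
    """
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for dy, dx in offsets:
        for y in range(h):
            sy = y - dy  # source row that lands on row y for this pinhole
            if not 0 <= sy < h:
                continue
            for x in range(w):
                sx = x - dx  # source column that lands on column x
                if 0 <= sx < w:
                    out[y][x] += image[sy][sx]
    # Divide by the number of pinholes so overall brightness stays comparable.
    n = len(offsets)
    return [[v / n for v in row] for row in out]
```

With offsets `[(0, 0), (0, 1)]`, a single bright pixel becomes two half-intensity pixels side by side, which is the ghosting effect a multi-pinhole image exhibits.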

Evaluation Metric

This task evaluates video understanding without access to clear video frames. Participants predict k activity labels for each video, and each of the k candidate labels is checked against the ground-truth activity label to compute the score.

We use k = 1 and k = 5 and evaluate recognition methods by the average of the two scores.
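A minimal sketch of this score, assuming each video has exactly one ground-truth class index and the per-k score is top-k accuracy (a video counts as correct if its label is among the k highest-scoring classes). The function names and the score-list input format are hypothetical; the official evaluation code for Task 2 is authoritative.

```python
from typing import List, Sequence


def top_k_accuracy(scores_per_video: List[Sequence[float]], labels: List[int], k: int) -> float:
    """Fraction of videos whose ground-truth class is among the k highest-scoring classes."""
    hits = 0
    for scores, label in zip(scores_per_video, labels):
        # Class indices ordered from most to least confident.
        ranked = sorted(range(len(scores)), key=lambda c: scores[c], reverse=True)
        hits += label in ranked[:k]
    return hits / len(labels)


def challenge2_score(scores_per_video: List[Sequence[float]], labels: List[int]) -> float:
    """Average of top-1 and top-5 accuracy, as described for this task."""
    top1 = top_k_accuracy(scores_per_video, labels, 1)
    top5 = top_k_accuracy(scores_per_video, labels, 5)
    return (top1 + top5) / 2
```

For instance, if one video's label is ranked first and another's is ranked third, top-1 accuracy is 0.5, top-5 accuracy is 1.0, and the final score is 0.75.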

Evaluation code and submission format

Here is the evaluation code for Task 2.